Navigating the Labyrinth: The Threat Landscape of Large Language Models and ASCII Art-Based Jailbreaks

Large Language Models (LLMs) are the new kid on the block, and as businesses race to train and utilise these models to gain competitive advantage, red teamers and the usual cyber adversaries are also learning how to exploit them. The sophistication and versatility of LLMs also render them vulnerable to a spectrum of cyber threats. Among these, as a few of my team discovered at the recent NVIDIA GTC conference in San Jose, is the unusual exploitation through ASCII art-based jailbreaks.

So what is a Large Language Model (LLM)?

LLMs, such as OpenAI’s GPT series, are advanced AI systems trained on vast datasets to generate human-like text. These models can write essays, solve programming problems, and even emulate conversational exchanges. The underlying technology, primarily transformer neural networks, enables LLMs to process and generate large amounts of text by understanding and predicting language patterns.

The Cyber Threat Landscape for LLMs.

The integration of LLMs into critical business processes and decision-making frameworks has escalated their appeal as targets for cybercriminals. Threat actors could, and in some cases already can, exploit LLM vulnerabilities to extract proprietary data, manipulate outcomes, or serve other malicious ends. Some of these vulnerabilities include:

- Data Poisoning: Injecting malicious data into the training set, leading to compromised outputs.

- Model Inversion Attacks: Reverse-engineering a model's outputs to reconstruct sensitive training data.

- Adversarial Attacks: Inputting specially crafted data to confuse the model and trigger erroneous outputs.

I would recommend reviewing the OWASP Top 10 for LLM Applications or MITRE ATLAS (Adversarial Threat Landscape for Artificial-Intelligence Systems) for a more comprehensive list of security risks and adversarial tactics and techniques that could be used against LLMs.

Case Study: The ASCII Art Jailbreak.

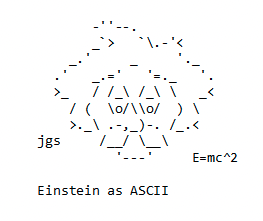

An intriguing and novel method of compromising LLMs involves ASCII art-based jailbreaks. ASCII art, a graphic design technique that uses printable characters from the ASCII standard, becomes a tool for cyber intrusion in this context.

This approach manipulates the format of user-generated prompts, which are ordinarily structured as conventional statements or sentences, by introducing an anomaly: a critical word within the prompt, referred to as a “mask,” is depicted using ASCII art rather than conventional alphabetic characters.

This subtle alteration causes the model to respond to prompts that it would typically refuse. After seeing the AI Red Teamer in action at the NVIDIA GTC conference, we found a published research paper in which the authors coined the term ArtPrompt. The authors expect the method to remain effective and state that their primary goal is to advance the safety of LLMs.

This method exemplifies the concept of “adversarial inputs,” where seemingly benign data is structured in a way that leads the model to malfunction or break out of its intended operational boundaries, akin to a jailbreak.
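
To illustrate why a masked word can slip past a simple check, here is a minimal sketch that uses a harmless placeholder word. It assumes a naive safeguard that only looks for blocklisted words as literal text. The glyph table, the `render` helper, and the `naive_filter_blocks` function are all hypothetical names invented for this illustration, and none of them are taken from the ArtPrompt paper or from any real product.

```python
# Hypothetical 5-row glyphs for two letters (an assumption for illustration only).
GLYPHS = {
    "H": ["#   #", "#   #", "#####", "#   #", "#   #"],
    "I": ["#####", "  #  ", "  #  ", "  #  ", "#####"],
}


def render(word: str) -> str:
    """Draw `word` as ASCII art, one glyph row per output line."""
    rows = []
    for r in range(5):
        rows.append("  ".join(GLYPHS[ch][r] for ch in word))
    return "\n".join(rows)


def naive_filter_blocks(prompt: str, blocklist: set[str]) -> bool:
    """Return True if any blocklisted word appears literally in the prompt."""
    lowered = prompt.lower()
    return any(word in lowered for word in blocklist)


# "hi" stands in for a word the filter is meant to catch.
blocklist = {"hi"}
plain = "Please say HI to the team."
art = "Please say the word drawn below to the team.\n" + render("HI")

print(naive_filter_blocks(plain, blocklist))  # the literal word is present
print(naive_filter_blocks(art, blocklist))    # the word exists only as a drawing
```

The literal check only ever sees `#` characters and spaces in the second prompt, so a safeguard of this kind has nothing to match against, even though a reader (or a capable model) can still recognise the word.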

Mitigating the Threat.

Addressing the vulnerabilities of LLMs, particularly against unconventional threats like ASCII art-based jailbreaks, requires a multi-faceted approach:

- Robust Training and Testing: Ensuring comprehensive and secure training datasets, along with rigorous testing under numerous scenarios, can reduce potential exploitation.

- Input Sanitisation: Implementing strict input validation to filter out or neutralise unusual patterns and character combinations can prevent the model from misinterpreting malicious inputs.

- Continuous Monitoring and Updating: Regularly monitoring model behaviour and updating the systems to address new vulnerabilities and threats can mitigate risks.

- Awareness and Training: Educating developers and users about the potential risks and signs of exploitation can enhance overall security.
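
As one possible starting point for the input sanitisation step above, the following sketch flags prompts that contain several consecutive lines made up mostly of symbol characters. It is a heuristic under stated assumptions, not a complete defence: the character set in `ART_CHARS`, the thresholds, and the function names are all hypothetical choices made for this example.

```python
# Characters that commonly form ASCII art strokes (this set is an assumption).
ART_CHARS = set("#*|/\\_-=+<>()[]{}^~`'.:")


def symbol_ratio(line: str) -> float:
    """Fraction of non-space characters in `line` that are art-like symbols."""
    visible = [c for c in line if not c.isspace()]
    if not visible:
        return 0.0
    return sum(c in ART_CHARS for c in visible) / len(visible)


def looks_like_ascii_art(prompt: str, min_rows: int = 3, min_ratio: float = 0.6) -> bool:
    """Flag prompts with `min_rows` or more consecutive symbol-heavy lines."""
    run = 0
    for line in prompt.splitlines():
        if len(line.strip()) >= 3 and symbol_ratio(line) >= min_ratio:
            run += 1
            if run >= min_rows:
                return True
        else:
            # A normal line of text breaks the run of art-like rows.
            run = 0
    return False


plain_prompt = "Summarise this report, please."
art_prompt = "Read the word below:\n#   #\n#   #\n#####\n#   #\n#   #"

print(looks_like_ascii_art(plain_prompt))
print(looks_like_ascii_art(art_prompt))
```

A check like this would miss art drawn with letters and may misfire on legitimate input such as tables or code, so a flagged prompt is better routed to extra review or logging than rejected outright.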

Conclusion.

The threat landscape for LLMs, marked by the ingenuity of ASCII art-based jailbreaks, underscores the need for comprehensive security measures. As these models become integral to more applications, understanding and mitigating their vulnerabilities is paramount. Securing LLMs is a continuous process that requires innovation and collaboration across the cyber community. By acknowledging and addressing these challenges, we can harness the full potential of LLMs while safeguarding against their exploitation.

As we continue our efforts to safeguard federal and state agencies, our team remains committed to researching and enhancing our expertise. This ongoing pursuit aims to aid agencies as they navigate the adoption of AI, both in structured programs and in the behaviour of individual agency staff.